This was another landmark week. I was finally able to get navigation meshes working with all the behaviors I’d implemented so far. To see why this is important, let’s consider an example:

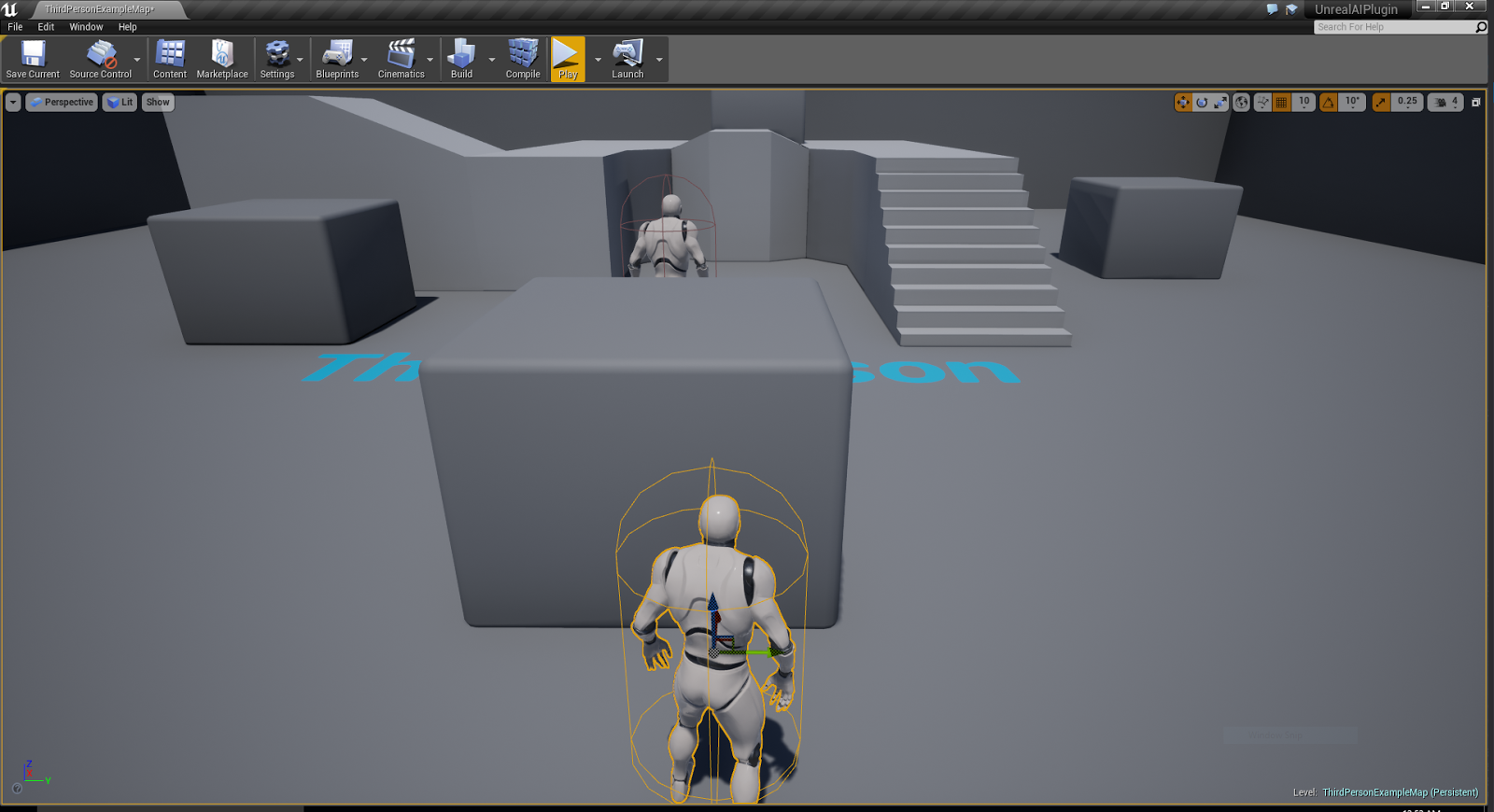

The follower (the smaller model) needs to get to the target position (the other character in this case). However, there is an obstacle in between. The steering algorithm doesn’t know anything about obstacles, so it will continually push the follower towards the actor, and into the obstacle.

With a navmesh, we can tell the steering algorithms to follow a sequence of points in order to reach the target actor. Now, calculating this path every frame is very expensive. So what do we do here?

First, we raycast to the target position to see whether the follower can reach it unobstructed. If it can, fantastic. We just ask the follower to seek to the target position. If there isn’t a direct path, we ask the navmesh for a set of points, store them, and then seek to them one by one.
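
The decision above could be sketched roughly as follows. This is a minimal, engine-independent illustration, not the plugin's actual code: `Vec3`, `PlanRoute`, and the two callback types are hypothetical names standing in for the engine's raycast and navmesh query.

```cpp
#include <functional>
#include <vector>

// Hypothetical minimal vector type; a real project would use the engine's own.
struct Vec3 { float x, y, z; };

// Hypothetical hooks standing in for the engine's raycast and navmesh query.
using LineOfSightFn = std::function<bool(const Vec3& from, const Vec3& to)>;
using FindPathFn = std::function<std::vector<Vec3>(const Vec3& from, const Vec3& to)>;

// Returns the points the follower should seek to, in order.
std::vector<Vec3> PlanRoute(const Vec3& follower, const Vec3& target,
                            const LineOfSightFn& hasLineOfSight,
                            const FindPathFn& findPath)
{
    // Cheap test first: if nothing blocks the ray, head straight for the target.
    if (hasLineOfSight(follower, target)) {
        return { target };
    }
    // Otherwise pay for a navmesh query and follow the points it returns.
    return findPath(follower, target);
}
```

The point of the split is that the expensive navmesh query only runs when the cheap raycast reports an obstruction.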

What if the target is moving, though? We obviously can’t recalculate the path every frame. What we do in this case is:

- We store the moving actor.

- We store the moving actor’s last position from the previous frame.

- We store the timestamp of the last pathfind.

- We store the current path points from the last pathfind.

Every frame, we check whether the moving actor’s position has changed since the previous frame. If it hasn’t, we just continue following the path points we already have. If the position has changed, however, we check how long ago the last pathfind was. If less than a threshold time has elapsed since then, we simply continue along the previous path points. If, however, we have exceeded that threshold time, we clear the path points and do a new pathfind.

This threshold time is tweakable, and is currently set at 0.5 seconds. Performing a pathfind every 0.5 seconds seems reasonable (for one actor over a short distance), and I haven’t seen the framerate take a hit.
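
As a rough sketch of that per-frame check, assuming a plain struct for the stored state: the names `PathFollowState` and `ShouldRepath` are made up for illustration, and the moving actor itself is left out because only its position matters here. The 0.5 second default mirrors the value mentioned above.

```cpp
#include <vector>

// Hypothetical minimal vector type; a real project would use the engine's own.
struct Vec3 { float x, y, z; };

inline bool SamePosition(const Vec3& a, const Vec3& b)
{
    return a.x == b.x && a.y == b.y && a.z == b.z;
}

// State kept between frames (illustrative layout, not the plugin's).
struct PathFollowState {
    Vec3 lastTargetPosition{0.0f, 0.0f, 0.0f}; // target position on the previous frame
    double lastPathfindTime = 0.0;             // timestamp of the last pathfind, in seconds
    std::vector<Vec3> pathPoints;              // points returned by the last pathfind
};

// Returns true when a new pathfind should be requested this frame.
bool ShouldRepath(PathFollowState& state, const Vec3& targetPosition,
                  double now, double repathInterval = 0.5)
{
    const bool targetMoved = !SamePosition(state.lastTargetPosition, targetPosition);
    state.lastTargetPosition = targetPosition;

    // Target has not moved: keep following the existing points.
    if (!targetMoved) {
        return false;
    }
    // Target moved, but the last pathfind is recent enough: keep the old points.
    if (now - state.lastPathfindTime < repathInterval) {
        return false;
    }
    // Threshold exceeded: drop the stale points and ask for a fresh path.
    state.pathPoints.clear();
    state.lastPathfindTime = now;
    return true;
}
```

When this returns `true`, the caller would run the pathfind and store the result in `pathPoints`. The exact equality test in `SamePosition` is a simplification; a small tolerance may be more practical for a target that jitters in place.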

Once we have all these points, we simply seek to them in order, removing each one from the path points when we reach it. For the very last point, we use arrive instead of seek, so the follower slows down as it gets close. This gives the movement a pretty smooth and natural feel.
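
A minimal sketch of that waypoint bookkeeping might look like this. It only decides which steering behavior to use and where to aim it; the behaviors themselves are not shown. `SteeringRequest`, `NextSteeringRequest`, and the `acceptanceRadius` parameter (how close counts as "reached") are assumptions for illustration.

```cpp
#include <cmath>
#include <deque>

// Hypothetical minimal vector type; a real project would use the engine's own.
struct Vec3 { float x, y, z; };

enum class SteeringMode { None, Seek, Arrive };

struct SteeringRequest {
    SteeringMode mode;
    Vec3 goal;
};

inline float Distance(const Vec3& a, const Vec3& b)
{
    const float dx = a.x - b.x;
    const float dy = a.y - b.y;
    const float dz = a.z - b.z;
    return std::sqrt(dx * dx + dy * dy + dz * dz);
}

// Removes reached points from the front of the path, then reports what to steer towards.
SteeringRequest NextSteeringRequest(std::deque<Vec3>& pathPoints,
                                    const Vec3& followerPosition,
                                    float acceptanceRadius)
{
    while (!pathPoints.empty() &&
           Distance(followerPosition, pathPoints.front()) <= acceptanceRadius) {
        pathPoints.pop_front();
    }
    if (pathPoints.empty()) {
        return { SteeringMode::None, followerPosition };
    }
    // Only the final point gets arrive; every intermediate point gets seek.
    const SteeringMode mode =
        pathPoints.size() == 1 ? SteeringMode::Arrive : SteeringMode::Seek;
    return { mode, pathPoints.front() };
}
```

Calling this once per frame with the follower's current position keeps the path list and the active behavior in sync.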

One of the great consequences of this hybrid approach is that when the target character jumps up onto a ledge, the follower will go around and take the stairs to reach the target’s height. However, when the target character jumps off the ledge, the follower will jump off too! This is because we ignore height during raycasts.

I also added some simple distance detection. The follower will now hide if she is near enough to the piloted actor, and if the actor goes further than some distance away, the follower will catch up with the piloted actor. Very rudimentary gameplay, but these are massive stepping stones.
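
One way to picture that distance check is as a tiny two-threshold switch. This is only a sketch: the names are hypothetical, the post does not give the actual distances, and keeping the current behavior between the two thresholds is my assumption rather than something the plugin is known to do.

```cpp
enum class FollowerBehavior { Hide, CatchUp };

// Picks the follower's behavior from her distance to the piloted actor.
// hideDistance and catchUpDistance are illustrative tuning values,
// with hideDistance expected to be smaller than catchUpDistance.
FollowerBehavior ChooseBehavior(FollowerBehavior current,
                                float distanceToActor,
                                float hideDistance,
                                float catchUpDistance)
{
    if (distanceToActor <= hideDistance) {
        return FollowerBehavior::Hide;
    }
    if (distanceToActor >= catchUpDistance) {
        return FollowerBehavior::CatchUp;
    }
    // In between: keep doing whatever she was already doing (assumed).
    return current;
}
```

Leaving a gap between the two distances avoids flickering between hiding and chasing when the actor hovers near a single cutoff.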

Hopefully we will have more, and better, gameplay next week. I’ll probably work on writing some gameplay system classes and organizing the code a little better.

Find the source code here: https://github.com/GTAddict/UnrealAIPlugin/
